When designing our mobile application testing strategy, it is important to remember that it is not all about the devices – it IS about all the devices.

The distinction comes from the fact that it is not possible to “brute force” test all the combinations of devices and operating systems. And just not testing? That is not a prudent option either.

For a bit of visualization, take a look at:

- https://cdn.opensignal.com/public/data/reports/global/data-2015-08/2015_08_fragmentation_report.pdf

Our test strategy needs to be intelligent and thoughtful, the result of investigation, analysis and consideration, designed to drive us towards ‘good enough’ quality for our (business) purposes at a specific point in time.

We have to be smart about it.

All Are Not Equal Under Test

Whether we are testing an app that is for public consumption or one that will only be used by business users within our company, we need information about those users and their requirements. Operational data, or specific requirements stating which hardware and mobile operating systems must be supported or are allowed, can go a long way toward prioritizing our testing. Additionally, understanding or profiling our users and their usage patterns will provide valuable input.

From this information and these requirements, we can expect to find criteria by which to prioritize the platforms we need to test our application upon.

Platform = specific device + a viable operating system for that device

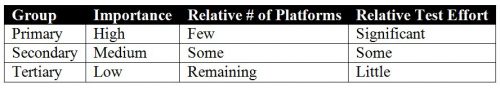

To meet our test strategy goal above, we will need to perform the appropriately responsible amount of testing on an appropriately responsible number of platforms. By merging our supported platform requirements with our user profiles and their usage patterns, we will be able to map sets or groups of supported platforms to amounts or degrees of testing effort.

Using an example of three groups, we might have a conceptual matrix like the following:

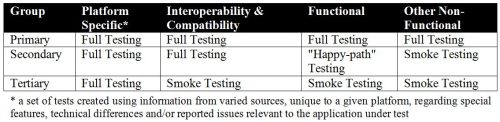
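As a minimal sketch of how such groups might be derived, the Python below ranks platforms by usage and cuts the ranking into three groups by cumulative user coverage. The `Platform` fields, the 60% and 90% cut-offs, and the device data are all hypothetical; real inputs would come from our own operational data and supported-platform requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """Platform = specific device + a viable operating system for that device."""
    device: str
    os_version: str
    usage_percent: int  # share of active users; illustrative numbers only


def assign_groups(platforms, group1_cutoff=60, group2_cutoff=90):
    """Rank platforms by usage and split them into three groups.

    A platform lands in group 1 while the platforms ranked above it cover
    less than group1_cutoff percent of users, in group 2 while they cover
    less than group2_cutoff percent, and in group 3 otherwise.
    Both cut-offs are assumptions, not recommendations.
    """
    ranked = sorted(platforms, key=lambda p: p.usage_percent, reverse=True)
    groups = {1: [], 2: [], 3: []}
    covered = 0
    for platform in ranked:
        if covered < group1_cutoff:
            groups[1].append(platform)
        elif covered < group2_cutoff:
            groups[2].append(platform)
        else:
            groups[3].append(platform)
        covered += platform.usage_percent
    return groups


# Hypothetical usage data for six platforms.
PLATFORMS = [
    Platform("Phone A", "OS 9", 40),
    Platform("Phone B", "OS 9", 25),
    Platform("Phone C", "OS 8", 15),
    Platform("Tablet D", "OS 8", 10),
    Platform("Phone E", "OS 7", 6),
    Platform("Tablet F", "OS 7", 4),
]

if __name__ == "__main__":
    for group, members in assign_groups(PLATFORMS).items():
        names = ", ".join(f"{p.device} / {p.os_version}" for p in members)
        print(f"Group {group}: {names}")
```

Usage share is only one possible ranking criterion. Contractual requirements or known-risky devices could just as well move a platform into a higher group by hand.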

Then, for each group, we might define the types and level of testing in each as:

Note: We might also reduce effort further, with little additional risk, by analyzing the platforms to identify sub-groups that are so alike that we might reasonably select a single platform to test upon as the “sub-group representative”.
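The sub-group idea in the note can be sketched as follows, assuming we have already decided which attributes make two platforms “alike”. The attribute names (`chipset`, `screen`), the sample data, and the rule of choosing the most-used member as representative are illustrative assumptions only.

```python
from collections import defaultdict


def pick_representatives(platforms, like_key):
    """Group platforms by like_key and return one representative per sub-group.

    The representative is the sub-group member with the highest usage.
    """
    sub_groups = defaultdict(list)
    for platform in platforms:
        sub_groups[like_key(platform)].append(platform)
    return {
        key: max(members, key=lambda p: p["usage_percent"])
        for key, members in sub_groups.items()
    }


# Hypothetical platforms; "chipset" and "screen" stand in for whatever
# attributes our own analysis shows to matter.
PLATFORMS = [
    {"name": "Phone A / OS 9", "chipset": "X1", "screen": "small", "usage_percent": 40},
    {"name": "Phone B / OS 9", "chipset": "X1", "screen": "small", "usage_percent": 25},
    {"name": "Phone C / OS 8", "chipset": "Y2", "screen": "small", "usage_percent": 15},
    {"name": "Tablet D / OS 8", "chipset": "Y2", "screen": "large", "usage_percent": 10},
]

representatives = pick_representatives(
    PLATFORMS, like_key=lambda p: (p["chipset"], p["screen"])
)

if __name__ == "__main__":
    for key, platform in representatives.items():
        print(key, "->", platform["name"])
```

With this sample data, four platforms collapse into three sub-groups. How coarse the key should be is a risk decision: the coarser the key, the fewer platforms we test and the more differences we choose to ignore.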

Process Multiplier Considerations

To manage the amount of test effort required for the project, we also need to be aware that the number of devices and operating systems can weigh heavily on some areas of our testing process.

For example, when isolating our defects, when they come back not-repro, or when re-testing them once they are fixed, do we:

- Check all the platforms to see where the bug is present?

- Just look at the platform where we found the bug?

- Check on one other “like” platform?

- Check on another “like” platform and an “unlike” platform?

- Or…?

The “gotcha” is, of course, that the more platforms we crosscheck a bug on each time it comes past us, the more effort we have to put in. But the fewer we check, the more risk we take on.
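To make the multiplier concrete, here is a rough back-of-the-envelope sketch. The policy names mirror the options listed above; the platform count, bug count, number of passes per bug, and minutes per check are invented figures, and the model assumes every check costs the same.

```python
def platforms_checked(policy, total_platforms):
    """Number of platforms a bug is checked on under each crosscheck policy."""
    per_policy = {
        "all_platforms": total_platforms,
        "origin_only": 1,                  # just where the bug was found
        "origin_plus_like": 2,             # plus one "like" platform
        "origin_plus_like_and_unlike": 3,  # plus one "like" and one "unlike"
    }
    return per_policy[policy]


def crosscheck_hours(policy, total_platforms, bugs, passes_per_bug, minutes_per_check):
    """Total crosscheck effort in hours, assuming a flat cost per check."""
    checks = platforms_checked(policy, total_platforms) * bugs * passes_per_bug
    return checks * minutes_per_check / 60


if __name__ == "__main__":
    # Hypothetical project: 12 platforms, 50 bugs, each bug passing by three
    # times (isolation, not-repro follow-up, re-test), 10 minutes per check.
    for policy in (
        "all_platforms",
        "origin_only",
        "origin_plus_like",
        "origin_plus_like_and_unlike",
    ):
        hours = crosscheck_hours(policy, 12, 50, 3, 10)
        print(f"{policy}: {hours:.0f} hours")
```

Under these assumed numbers, checking every platform costs 300 hours against 25 hours for the originating platform alone. The model says nothing about the risk side of the trade-off, which still has to be judged per project.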

Balancing Tools & Automation

Another example where large numbers of potential test platforms require thoughtful management of effort is when it comes to tools and automation.

Ideally, automation should be able to save us effort across the table above by helping us automate large chunks of tests that can then be run “auto-magically” across multiple platforms, even simultaneously. However, device emulators and simulators are not the real deal and as such they will each have their own quirks and differences that will impact the test results.

For best results and risk mitigation, we should plan a balance of virtual and on-device testing, with a balance of automated and manual testing, using a mix of home-grown, free/open-source, and COTS tools.

Training For Mobile

There is an ever-changing body of knowledge around mobile testing pertaining to the tricks, tools, design requirements, and gotchas for the platforms of today and yesterday.

We need to ensure that our teams are up to speed and ready to test mobile applications across a wide range of platforms, while keeping the test results clean and detailed enough to give the developers the information they need to fix the issues efficiently. And, of course, the more platforms we have to support, the larger the knowledge base each tester needs to absorb and maintain.

Our test strategy should reference what knowledge is expected to be captured, communicated and maintained outside of our heads, and how.

Conclusion

So it IS about all the devices, but not in the sense that we should try to test everything on as many platforms as we can get our hands on.

Because of the proliferation of devices and operating systems, our test strategy needs to have a “smart” approach for testing our mobile applications to get the maximum return on investment while minimizing risk.