Over the years, I have been involved in a number of projects testing COTS (Commercial-Off-The-Shelf) systems across a range of industries. Sometimes the project was with the vendor and sometimes with the customer. When it came to supporting a company undertaking a COTS system implementation, I always appreciated the benefits that came with a “quality” evaluation.

When such an evaluation is conducted in a thoughtful manner, a lot of ramp-up, preparation, AND testing can be shifted to the left (Ref: New Project? Shift-Left to Start Right!), making the overall selection process that much more likely to find the “best fit” COTS system.

Implementing COTS Systems Costly; Mitigate Your Risks

COTS systems are a common consideration for most enterprise organizations when planning their IT strategy around ERP, CMS, CRM, HRIS, BI, etc. Rarely will an organization build such a substantial software system from scratch if there is a viable alternative.

However, unlike software products that we can just install and start using right out-of-the-box, these COTS systems must typically undergo configuration, customization and/or extension before they will meet the full business needs of the end-user. This can get expensive.

As such, implementation necessarily requires a strong business case to justify the level of investment involved. Anything that impairs the selection and implementation of the best fit COTS system will put that business case at risk.

Earlier involvement of testing can be key to mitigating risk to the business case with respect to the following challenges.

A COTS System is a Very Dark “Black Box”

Having to treat an application as complex as the typical COTS system like a black box is a significant challenge.

When we conduct black box testing for a system that we have built in-house, we have requirements, insight into the architecture and design, and access to the developers’ knowledge of their code. We can get input on which areas are risky and where there is tighter coupling or more complex business logic. We can even ask for testability improvements.

When we are testing COTS systems, we don’t have any of that. The only requirements are the user manuals, the insights come from tidbits gleaned from the vendor and their trainers, and we don’t have access to the developers or even experienced users. It is a much darker black box that conceals significant risk.

Additionally, not all the testing can be done by manually poking around in the GUI. A great deal of the effort goes into testing how the COTS system communicates with other systems and data sources via its interfaces.

Also, consider the data required. As Virginia Reynolds comments in Managing COTS Test Efforts, In Three Parts, when testing COTS systems “it’s all-data, all the time.” In addition to using data as part of functional and non-functional testing, specific testing of data migration, flow, integrity, and security is critical.

Leaving the majority of testing for such a system until late in the implementation process, possibly with most of it done by business users during user acceptance, is very risky for the organization.

Claims Should Be Verified

When we create a piece of software in-house or even if we contract another party to write it for us, we control the code. We can change it, update it, and contract a different 3rd party to extend it if and when we feel like it. With COTS systems, the vendor owns the code and they are always actively working on it. They are continually upgrading and enhancing the software.

As we know from our own testing efforts, there isn’t time to test everything, or to fix everything. That means the vendor will have made choices and trade-offs with respect to the features and the quality of the system they are selling to us and to all their other customers.

Of course, it is reasonable to expect that the vendor will test their core functionality, or the “vanilla” configuration of their system. They would not remain in business long if they did not. But, to depend on the assumption that what the vendor considers to be “quality” is the same as what we consider to be “quality”, is asking for trouble.

“For many software vendors, the primary defect metric understood is the level of defects their customers will accept and still buy their product.” Randall Rice, Testing COTS-Based Applications

Even if we trust the vendor and their claims, remember that they are not testing in our specific context, e.g., meeting our functional and quality requirements when the COTS system is configured to our specific business processes and integrated with our application ecosystem. (Ref: To Test or Not to Test?)

Vanilla is Not the Flavour of Your Business

The vendor of the COTS system is not making their product for us, at least not just for us. They are making their system for the market/industry that our business is a part of.

As each customer has their own specific way of doing business, it is very unlikely that we would take a COTS system and implement it straight out-of-the-box in its “vanilla” configuration. And though we may be “in the industry” that the COTS system is intended to provide a solution for, there will always need to be some tweaking and some gluing.

The COTS system will need to be configured, customized and/or extended before it is ready to be used by the business. And, because of the lack of insight and experience with the system, the impact of any such changes will not be well understood – a risk to implementation.

COTS Systems Must “Play Nice”

Testing COTS systems comes in two major pieces: testing the configured COTS system itself, and testing the COTS system together with its upstream and downstream applications.

Many of the business’ work processes will span multiple applications and we need to look for overall system level incompatibilities and competing demands on system resources. Issues related to reliability, performance, and security can often go unnoticed until the overall system is integrated together.

And when there is an issue, it can be very difficult to isolate the source of the error if the problem results from the interaction of two or more applications. Isolating such issues becomes even harder when the applications involved are COTS systems (black boxes) from different vendors.

“Finding the actual source of the failure – or even re-creating the failure – can be quite complex and time-consuming, especially when the COTS system involves products from multiple vendors.” – Richard Bechtold, Efficient and Effective Testing of Multiple COTS-Intensive Systems

We need to have familiarity with the base COTS system in order to be able to isolate these sorts of integration issues more effectively, and especially to be able to confidently identify where the responsibility lies.

Testing COTS Systems during Evaluation

If there has been an honest effort to “do it right”, then a formal selection process will take place prior to implementation, one that goes beyond reading the different vendors’ websites and sales brochures. And in this case, testing can be involved earlier in the process.

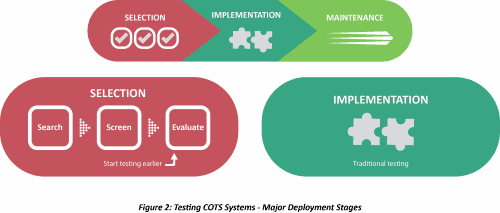

Consider the three big blocks of a COTS deployment: Selection, Implementation, and Maintenance. The implementation phase is traditionally where all the action is, especially from the testing point of view.

But, we don’t want to be struggling in implementation with issues related to the challenges described above. We need to explore the COTS system’s functionality and its limits in the aspects of quality that are important to us before that point. Why find out about usability, performance, security model, and data model issues after selection? After all, moving release dates is usually quite costly.

“The quality of the software that is delivered for a COTS product depends on the supplier’s view of quality. For many vendors, the competition for rushing a new version to market is more important than delivering a high level of software reliability, usability, and other qualities.” – Judith A. Clapp, Audrey E. Taub, A Management Guide to Software Maintenance in COTS-Based Systems

If we get testing started early, we can be ramping up on this large, complex software system, reviewing requirements, documenting our important test cases, finding bugs and other issues, determining test environment and data needs, and identifying upstream and downstream application dependencies, all before the big decision is made. This informs that decision while responsibly preparing for the inevitable implementation.

To realize these and other benefits, we can leverage testing and shift efforts to the left, away from the final deadline. We make testing an integral part of decision-making during evaluation.

We want to choose the right solution the first time with no big surprises after making that choice. This early involvement of testing, done efficiently, can help our implementation go that much more smoothly.

Multiple Streams of Evaluation Testing

When designing a new software system, there are many considerations around what it needs to do and what are the important quality characteristics. This is no different with a COTS system, except that it is already built. That functionality and those quality characteristics are already embedded in the system.

It would be great if there was a system that perfectly fit our needs right out-of-the-box, functionally and quality-wise. But that won’t be the case. The software was not built for us. There will be things about it that fit and don’t fit, things that we like and don’t like, and things that will be missing. This applies to our fit with the vendor as well.

Our evaluation must take the list of candidates that passed the non-technical screening and rapidly get to the point where we can say: “Yes, this is the best choice for us. This is the one we want to put effort into making work.”

In order to do that, we will need to:

- Confirm vendor claims in terms of functionality, interfaces for upstream/downstream applications and DW/BI systems, configurability, compatibility, reporting, etc.

- Confirm suitability of the data model, the security model, and data security

- Confirm compatibility with the overall system environment and dependent applications

- Investigate the limits of quality in terms of the quality characteristics that are key to our business and users (e.g., reliability, usability, performance, etc.)

- Uncover bugs, undocumented features, and other issues in areas of the system that are business critical, popular/frequently used, and/or have complex/involved business processes

The evaluation will also need to include more than just the COTS system. The vendor should be evaluated on such things as organizational maturity, financial stability, customer service/support, quality of training/documentation, etc.

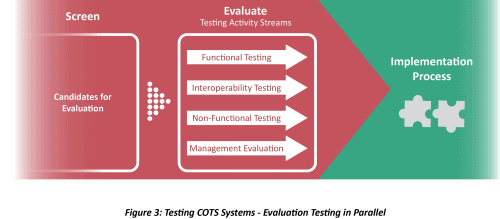

To do all of this efficiently, we can organize our evaluation testing into four streams of activity that we can execute in parallel, giving us a COTS selection process that can be illustrated at the high-level as follows:

As adapted from Timing the Testing of COTS Software Products, the streams of evaluation testing would focus on the following:

- Functional Testing: the COTS systems are tested in isolation to learn and confirm the functional capabilities being provided by each candidate

- Interoperability Testing: the COTS systems are tested to determine which candidate will best be able to co-exist in the overall application ecosystem

- Non-Functional Testing: the COTS systems are tested to provide a quantitative assessment of the degree to which each candidate meets our requirements around the aspects of quality that are important to us

- Management Evaluation: the COTS systems are evaluated on their less tangible aspects including such things as training, costs, vendor capability, etc.

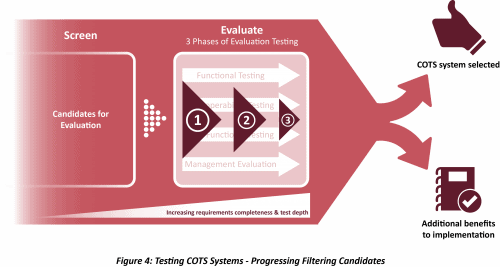

Caveat: We don’t want to test each system to the same extent. We want to eliminate candidate COTS systems as rapidly as possible.

Rapidly Narrowing the Field

In order to eliminate candidate COTS systems as rapidly and efficiently as possible, we need a progressive filtering approach to applying the selection criteria. This approach will also ensure that the effort put into evaluating the candidate COTS systems is minimized overall.

Additionally, the requirements gathering and detailing can be conducted in a just-in-time (JIT) manner over the course of the entire selection phase rather than as a big bang effort at the beginning of implementation.

As an example, we could organize this progressive filtering approach into three phases or levels:

Testing would scale up over the course of the three phases of evaluation, increasing in coverage, complexity, and formality as the number of systems being evaluated reduces.
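As a rough illustration of progressive filtering, the sketch below runs a list of candidates through successive phases, each with a higher bar and fewer surviving slots. Everything in it is a hypothetical assumption made for the example: the phase names, thresholds, vendor names, scores, and the `narrow_field` and `lookup_score` helpers. In practice each phase score would come from the evaluation testing done at that level, not from a lookup table.

```python
from typing import Callable, NamedTuple


class Phase(NamedTuple):
    """One level of the progressive filter (names and numbers are illustrative)."""
    name: str
    min_score: float    # candidates scoring below this are eliminated
    keep_at_most: int   # cap on how many candidates move to the next phase


def narrow_field(
    candidates: list[str],
    phases: list[Phase],
    evaluate: Callable[[str, Phase], float],
) -> list[str]:
    """Apply each phase in order, evaluating only the candidates still in play."""
    remaining = list(candidates)
    for phase in phases:
        scored = [(evaluate(name, phase), name) for name in remaining]
        survivors = [(score, name) for score, name in scored if score >= phase.min_score]
        survivors.sort(key=lambda pair: pair[0], reverse=True)
        remaining = [name for _, name in survivors[: phase.keep_at_most]]
    return remaining


# Hypothetical phases: the bar rises as the field shrinks.
PHASES = [
    Phase("screening", min_score=0.5, keep_at_most=3),
    Phase("hands-on trial", min_score=0.6, keep_at_most=2),
    Phase("proof of concept", min_score=0.7, keep_at_most=1),
]

# Hypothetical scores (0.0 to 1.0) standing in for real evaluation results.
SCORES = {
    "Vendor A": {"screening": 0.90, "hands-on trial": 0.80, "proof of concept": 0.75},
    "Vendor B": {"screening": 0.70, "hands-on trial": 0.65, "proof of concept": 0.80},
    "Vendor C": {"screening": 0.60, "hands-on trial": 0.40},
    "Vendor D": {"screening": 0.30},
}


def lookup_score(name: str, phase: Phase) -> float:
    """Stand-in for actually running that phase's tests against a candidate."""
    return SCORES[name].get(phase.name, 0.0)


best_fit = narrow_field(list(SCORES), PHASES, lookup_score)
print(best_fit)
```

With these made-up numbers, the candidate that looks strongest at the screening level is not the one that survives the deepest phase, which is the kind of surprise this approach is meant to surface before selection rather than after.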

The best fit COTS system will be more confidently identified, and a number of important benefits generated, in the course of this process.

Testing with Benefits

With our efficient approach to involving testing during evaluation, we will not only be able to rapidly select the best option for the specific context of our company, but we will also be able to leverage the following additional benefits from our investment, as we move forward into implementation:

- Requirements Captured: Requirements have been captured from the business and architecture, reviewed, and tested against

- Stronger Fit-Gap Analysis: Missing functionality has been identified for inputting to implementation planning

- Test Team Trained: The test team is trained up on the chosen COTS system and has practical experience testing it

- Quality Baseline Established: Base aspects of the COTS system have already been tested, establishing a quality baseline

- Development Prototypes Tested: Prototypes of the “glue” code that interacts with the interfaces and/or simulates other applications, along with ETL scripts for data migration, have been developed and tested

- Test Artifacts Created: Reusable test artifacts, including test data, automated test drivers, and automated data loaders are retained for implementation testing

- Test Infrastructure Identified: Needs around tools, infrastructure and data for testing have been enumerated for inputting to implementation planning

- Bug Fixing: Bugs, undocumented features, and other issues related to the COTS system have been found and raised to the vendor prior to signing on the dotted line

Conclusion

In addition to uncovering issues early, involving testing during evaluation will establish a baseline of expected functional capability and overall quality before any customization and integration. This will be of great help when trying to isolate issues that come up in implementation.
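One way to picture how such a baseline helps with isolation is a simple comparison of test outcomes recorded against the vanilla system during evaluation with outcomes from the configured system during implementation. The sketch below is only an assumption about how this might be recorded: the test identifiers, the "pass"/"fail" outcome strings, and the `compare_to_baseline` helper are all hypothetical.

```python
def compare_to_baseline(
    baseline: dict[str, str], current: dict[str, str]
) -> dict[str, list[str]]:
    """Classify current test outcomes relative to the evaluation-time baseline."""
    report: dict[str, list[str]] = {
        "regressed": [],      # passed on vanilla, fails now: look at our changes first
        "fixed": [],          # failed on vanilla, passes now
        "still_failing": [],  # failed on vanilla too: likely a vendor issue
        "new": [],            # no baseline: covers functionality we added or changed
    }
    for test_id, outcome in sorted(current.items()):
        before = baseline.get(test_id)
        if before is None:
            report["new"].append(test_id)
        elif before == "pass" and outcome == "fail":
            report["regressed"].append(test_id)
        elif before == "fail" and outcome == "pass":
            report["fixed"].append(test_id)
        elif before == "fail" and outcome == "fail":
            report["still_failing"].append(test_id)
    return report


# Hypothetical outcomes recorded against the vanilla system during evaluation.
baseline_results = {
    "login": "pass",
    "invoice_export": "pass",
    "bulk_import": "fail",
}

# Hypothetical outcomes after configuration and integration.
current_results = {
    "login": "pass",
    "invoice_export": "fail",
    "bulk_import": "fail",
    "custom_approval_flow": "fail",
}

report = compare_to_baseline(baseline_results, current_results)
print(report)
```

A test in the "regressed" group points toward our own configuration, customization, or integration work, while one in "still_failing" was already a problem in the base product and is more plausibly the vendor's responsibility.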

“Vendors are much more likely to address customer concerns with missing or incomplete functionality as well as bugs in the software before they sign on the dotted line.” – Arlene Minkiewicz, 6 Steps to a Successful COTS Implementation

Most important of all, after this testing during evaluation, the implementation project can more reasonably be considered an enhancement of an existing system that we are already familiar with. We can therefore focus our testing during implementation on the changes made when configuring, customizing, extending, and integrating the COTS system, mitigating the risks associated specifically with those changes while having confidence that the larger system has already been evaluated from a quality point of view.

With fewer surprises and problems during implementation, we should end up having to do less testing overall.

“The success of the entire development depends on an accurate understanding of the capabilities and limitations of the individual COTS. This dependency can be quantified by implementing a test suite that uncovers interoperability problems, as well as highlighting individual characteristics. These tests represent a formal evaluation of the COTS capabilities and, when considered within the overall system context can represent a major portion of subsystem testing.” – John C. Dean, Timing the Testing of COTS Software Products

With an approach such as this, we should be able to reduce candidate COTS system options faster, achieve a closer match to our needs, know earlier about fit-gaps and risks, capture our requirements earlier and more completely, and spread out the demands on testing resources and environments – all of which should help us achieve a faster deployment and a more successful project.

Choose your COTS system wisely and you’ll save time and money… Make your evaluation count.