Testing is commonly handed constraints on schedule, budget, team members and skills, and even tools.

So, when you are asked to step out of this box and propose what you think is needed for the next project, a few responses might come to mind:

- [Relief] Finally! I am going to get more people, better tools, and we are going to do this thing right!

- [Defensive] What we did last time worked fine, didn’t it? Didn’t it?

- [Cynical] You are just going to cut whatever number I give you by 30% while holding me to my original effectiveness targets.

- [Disbelief] Is this a trick? You really want to know?

Or, depending on the company and its philosophy toward quality, you may experience the opposite: on every project you are asked to improve, to be better than last time.

In either case, you are being asked to stand and deliver: increase testing capability and coverage, reduce turnaround time, find those important defects earlier, etc.

“Good Enough” Testing?

When do we stop testing?

When quality is good enough.

So…When do we stop testing?

Let’s assume that “good enough” quality can be interpreted as “sufficiently valuable or fit-for-use”.

The project wants to reach this goal for its system or product as quickly and as cost efficiently as possible. How can testing help?

Let’s start with:

- Define quantifiable quality criteria for the project

- Capture the risk tolerance for the project

- Provide flexibility around skills/techniques/tools

- Avoid a one-size-fits-all approach to testing by providing options

Also, we can work at getting stakeholders to believe that:

- An upfront investment in testing for a project can actually pay off within that project lifecycle

- A larger test effort doesn’t automatically bring an appreciable benefit for the added cost

- Cutting or squeezing testing does not ultimately save time or money

- A critical project is a good place to try new things (after all, aren’t they all critical to someone?)

Option A – I Choose You?

Given that each project is unique and has its own needs in terms of quality, we need to design a custom approach to testing by:

- Establishing a stakeholder-agreed definition of what quality means for your project

- Identifying and analyzing your risks, mapping testing activities where possible

- Proposing a business case for each test strategy option (e.g., What do I get for that price?)

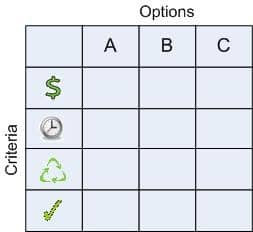

When you create your options, start by considering the basic three: A-Light (quick and dirty), B-Balanced (thoughtful), and C-Heavy (overkill?).

And, in each option:

- Map the risks to be addressed/evaluated by testing to test activities (and vice versa)

- Estimate effort, cost, schedule, future value, confidence/effectiveness, etc.

- Solicit and incorporate feedback
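To make the risk mapping concrete, here is a minimal sketch of how an option could be recorded so that gaps are easy to spot. The class name `StrategyOption`, the risk identifiers, the activities, and every figure are hypothetical placeholders, not values from a real project.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class StrategyOption:
    """One test strategy option, recorded as a small business case."""
    name: str
    effort_days: int   # hypothetical estimate
    cost: float        # hypothetical estimate
    # risk id -> test activities that address or evaluate it
    risk_to_activities: Dict[str, List[str]] = field(default_factory=dict)

    def uncovered(self, risks: List[str]) -> List[str]:
        """Risks that no test activity in this option addresses."""
        return [r for r in risks if not self.risk_to_activities.get(r)]

    def activity_to_risks(self) -> Dict[str, List[str]]:
        """The reverse mapping: which risks justify each activity."""
        inverse: Dict[str, List[str]] = {}
        for risk, activities in self.risk_to_activities.items():
            for activity in activities:
                inverse.setdefault(activity, []).append(risk)
        return inverse


# Illustrative risk list for an imaginary project.
risks = ["R1-data-loss", "R2-slow-checkout", "R3-bad-report-totals"]

light = StrategyOption(
    "A-Light", effort_days=10, cost=8_000.0,
    risk_to_activities={"R1-data-loss": ["smoke tests"]},
)
balanced = StrategyOption(
    "B-Balanced", effort_days=25, cost=20_000.0,
    risk_to_activities={
        "R1-data-loss": ["smoke tests", "recovery test"],
        "R2-slow-checkout": ["load test"],
    },
)

for option in (light, balanced):
    print(option.name, "leaves uncovered:", option.uncovered(risks))
```

Listing the uncovered risks beside the cost of each option gives stakeholders a direct answer to "What do I get for that price?", and the reverse mapping shows which activities could be dropped if a risk is accepted.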

But most of all, think about where the “smart” is in each of your options.

Two Sides to Being Smart

Are you being smart with me?

Rather that than the alternative…

You’re doing it again!

One of the crucial components to being “smart” in a constrained situation is to be able to correctly decide or select which things are “must-haves”, which are “should-haves”, and which are “nice-to-haves”.

How you sort these things depends largely on your appetite for risk and what you are willing to pay. To make the trade-offs clear to stakeholders, we can create options for testing as business cases and place them on the Total Cost of Quality curve.
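As a rough illustration of placing options on a Total Cost of Quality curve, the sketch below uses a deliberately simple model: total cost is the testing spend plus the failure cost expected to remain after testing. The model, the function name `total_cost_of_quality`, and all numbers are assumptions made up for this example; a real business case would substitute the project's own estimates.

```python
def total_cost_of_quality(test_cost, risk_exposure, effectiveness):
    """Testing spend plus the failure cost expected to remain after testing.

    effectiveness is the assumed fraction (0.0 to 1.0) of the risk
    exposure that the option's testing removes.
    """
    residual_failure_cost = risk_exposure * (1.0 - effectiveness)
    return test_cost + residual_failure_cost


# Hypothetical figure: expected cost of failures if nothing were tested.
RISK_EXPOSURE = 100_000

# Hypothetical options: (testing cost, assumed effectiveness).
options = {
    "A-Light": (10_000, 0.50),
    "B-Balanced": (25_000, 0.80),
    "C-Heavy": (60_000, 0.90),
}

totals = {
    name: total_cost_of_quality(cost, RISK_EXPOSURE, eff)
    for name, (cost, eff) in options.items()
}

for name, total in totals.items():
    print(f"{name}: total cost of quality is about {round(total):,}")

lowest = min(totals, key=totals.get)
print("Lowest total cost with these assumptions:", lowest)
```

With these made-up figures the balanced option comes out cheapest overall: the light option leaves too much failure cost behind, and the heavy option spends more on testing than the extra risk reduction is worth. Change the assumptions and the answer can change, which is exactly the conversation to have with stakeholders.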

Another dimension to “smart” is innovating in order to shift the quality curve to the right, thereby maintaining or reducing the overall cost while increasing quality.

Examples:

- A test harness to drive a trillion+ transactions through an underlying sub-system where the data is generated on the fly from a set of transaction schema templates and situational test instruction sets (How else would you do it? Through the GUI?)

- Smoke test automation creates the seed data that the manual testers will use for further system testing

- Strategically managing the regression test suite of a mature product such that the overall volume of tests to be executed grows only marginally for each release (or not at all)

- Happy-path data entry is automated for each form via a “quick loader” button on the screen such that the tester can use the data as-is or manually tweak it as needed before moving to the next screen in the test scenario

- Model-driven testing technique (+ tool) is used in complex areas of the system to extract the minimal set of test scenarios needed for maximum coverage
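To give a feel for the last example, here is a small sketch of picking a compact set of scenarios that still covers every element of a model. It uses a simple greedy heuristic, which is an assumption for illustration only: real model-driven tools use their own algorithms, and a greedy pick is not guaranteed to be the true minimum. The scenario names and model elements are hypothetical.

```python
def select_scenarios_greedy(coverage):
    """Pick scenarios until every model element is covered.

    coverage maps a scenario name to the set of model elements it exercises.
    Each round takes the scenario that covers the most still-uncovered
    elements; ties are broken by scenario name for repeatable results.
    """
    remaining = set().union(*coverage.values())
    chosen = []
    while remaining:
        best = max(sorted(coverage), key=lambda s: len(coverage[s] & remaining))
        chosen.append(best)
        remaining -= coverage[best]
    return chosen


# Hypothetical scenarios and the model elements each one exercises.
coverage = {
    "S1": {"login", "search"},
    "S2": {"search", "add_to_cart", "checkout"},
    "S3": {"login", "checkout"},
    "S4": {"add_to_cart"},
}

selected = select_scenarios_greedy(coverage)
print("Scenarios to run:", selected)
```

Here two of the four scenarios are enough to touch every element, so the other two become candidates to drop or to run less often.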

With “smart options” based on quality criteria, risks, and project constraints in front of stakeholders, useful discussion is possible. Choices can be made with awareness of the implications in terms of trade-offs or opportunity costs.

Note: In creating your options, you are really trying to find the “right-fit” option. Therefore, any initial option can be “looted” for pieces to be merged with others on the way to creating that final, agreed, right-fit approach.

The Smart-Fit is the Right-Fit

It is a very human tendency not to change until continuing with the status quo becomes more painful than finally making the needed change. Then, of course, something has to be done, and fast.

“Change before you have to.” – Jack Welch

Don’t wait to be asked to change. Instead, let’s be steadily evolving and ready to take the next step at each opportunity, asked for or not, by:

- Assembling each testing approach option as a business case

- Adding “smart” by drawing from your backlog of testing improvement ideas

- Adjusting and converging to the chosen approach

Et voilà! Today’s testing approach will meet the needs of today’s project, and in a more efficient and effective way than yesterday’s – The smart(est)-fit for the right now.
